April 11, 2023

Deep learning model

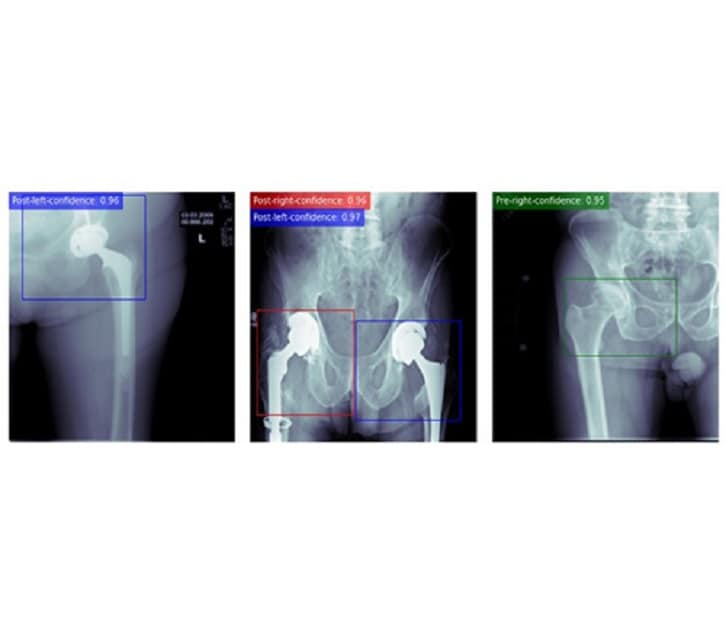

A deep learning model was developed to detect hip joints on anterior-posterior hip and pelvis radiographs. The model can determine the laterality of the joint as well as the presence or absence of the implant in the detected joint. Furthermore, the model will fit a bounding box around the detected joint, which can be used to crop the radiograph for later use cases.
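
As a rough illustration of the cropping step, the sketch below clamps a bounding box to the image and extracts that region. The `detection` dictionary, its field names and the `(x_min, y_min, x_max, y_max)` box convention are assumptions made for this example; the article does not describe the model's actual output format.

```python
from typing import List, Tuple

Image = List[List[int]]  # rows of pixel intensities

def crop_to_box(image: Image, box: Tuple[int, int, int, int]) -> Image:
    """Crop image to (x_min, y_min, x_max, y_max), clamped to the image bounds."""
    height = len(image)
    width = len(image[0]) if height else 0
    x_min, y_min, x_max, y_max = box
    x_min, x_max = max(0, x_min), min(width, x_max)
    y_min, y_max = max(0, y_min), min(height, y_max)
    return [row[x_min:x_max] for row in image[y_min:y_max]]

# Hypothetical detector output: laterality, implant flag and a pixel box.
detection = {"laterality": "left", "has_implant": True, "box": (2, 1, 5, 4)}

# Toy 5 x 6 "radiograph" whose pixel value encodes its row and column.
radiograph = [[10 * r + c for c in range(6)] for r in range(5)]
crop = crop_to_box(radiograph, detection["box"])
```

Clamping keeps the crop valid even when a predicted box extends slightly past the edge of the radiograph.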

Three years ago, Cody C. Wyles, M.D., an orthopedic surgeon at Mayo Clinic in Minnesota, noted artificial intelligence (AI) was coming of age in medical disciplines. He observed the development of AI at Mayo Clinic, which piqued his interest in developing AI tools with orthopedic clinical applicability.

"AI allows us to answer questions in new ways and offers insights into problems we've plateaued in trying to solve for many years," says Dr. Wyles. "It allows us to treat our patients in a more personalized way."

He explains that he has partnered with amazing data scientists at Mayo Clinic to solve orthopedic problems. Over time, he became versed in data science and computer science. His AI research team began small.

"It started with two or three of us meeting on nights and weekends to do proof-of-concept studies," he says. "Now, we have an orthopedic artificial intelligence lab with eight surgeons, four data scientists, radiologists and a lab manager."

Currently, the lab is developing orthopedic surgery-focused AI tools. Although the lab is relatively new, a few of its projects have already made their way into the clinic, says Dr. Wyles. He says that radiology has proved to be one of the most powerful disciplines in which to use AI. Key applications for which the lab is using AI include:

Annotation. The research group has found that AI can automatically annotate the features physicians care about most in an X-ray.

"This is very time-consuming for humans and prone to problems," Dr. Wyles says.

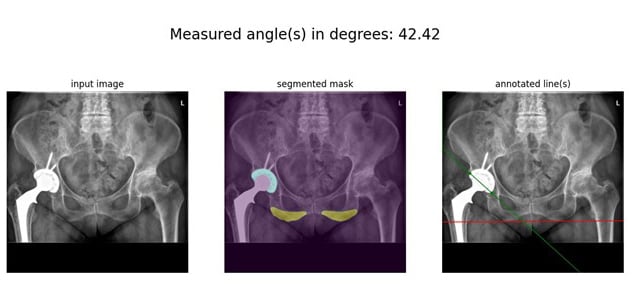

A deep learning pipeline for measuring acetabular inclination angle(s)

A deep learning pipeline for measuring acetabular inclination angle(s) on anterior-posterior pelvic radiographs of patients with total hip arthroplasty. A segmentation model will first segment the ischial tuberosities and the implant cup, and then an image processing algorithm will process the segmentation masks to yield the desired angle(s).
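
The image processing step can be pictured as an angle between two lines. The sketch below assumes the segmentation masks have already been reduced to four landmark points: the lowest point of each ischial tuberosity and the two ends of the cup's opening rim. Those landmarks, the function names and the pixel coordinates are illustrative assumptions, not the lab's published method.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) in pixel coordinates

def line_angle_deg(p1: Point, p2: Point) -> float:
    """Direction of the line from p1 to p2, in degrees."""
    return math.degrees(math.atan2(p2[1] - p1[1], p2[0] - p1[0]))

def inclination_angle(left_tub: Point, right_tub: Point,
                      cup_rim_a: Point, cup_rim_b: Point) -> float:
    """Acute angle between the inter-tuberosity line and the cup rim line."""
    reference = line_angle_deg(left_tub, right_tub)
    cup = line_angle_deg(cup_rim_a, cup_rim_b)
    diff = abs(cup - reference) % 180.0
    # Lines have no direction, so fold the result into the range 0 to 90.
    return min(diff, 180.0 - diff)

# Hypothetical landmarks: a level pelvis and a cup opening tilted 45 degrees.
angle = inclination_angle((100.0, 400.0), (300.0, 400.0),
                          (320.0, 300.0), (370.0, 250.0))
```

Measuring against the inter-tuberosity line rather than the image edge keeps the result independent of how the pelvis is rotated within the frame.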

AI also has been useful for documenting angles. If the patient has an implant, AI algorithms applied to imaging will indicate if that implant has changed position. Dr. Wyles says that imaging results with AI algorithms applied can be more accurate and efficient than if a human had documented the angles.

"If we documented all this individually for each patient, it would take significant time per patient," Dr. Wyles says. "Multiplied by all the patients for whom we need documented angles, this would be an enormous amount of time."

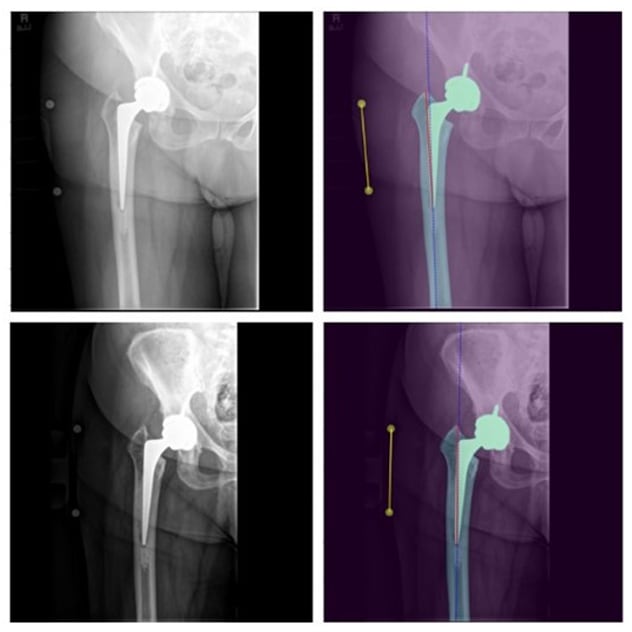

A deep learning pipeline to measure stem subsidence

A deep learning pipeline to measure stem subsidence on two successive postoperative anterior-posterior hip radiographs of a patient with total hip arthroplasty. First, a segmentation algorithm will segment femur, implant and magnification markers on both radiographs. Next, an image processing pipeline will process the segmentation masks to measure the distance between the superior aspect of the greater trochanteric bone and tip of the stem. This distance is then corrected based on the radiograph magnification and then compared with the same distance on the next radiograph. The difference of the two distances will be reported as the subsidence level.
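
The measurement arithmetic described in this caption can be sketched as follows. The example assumes each radiograph includes a magnification marker of known physical size and that the landmark coordinates have already been extracted from the segmentation masks. The function names, the 25 mm marker size, the pixel values and the sign convention (later minus earlier) are all assumptions for illustration.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) in pixel coordinates

def corrected_distance_mm(trochanter: Point, stem_tip: Point,
                          marker_px: float, marker_mm: float) -> float:
    """Trochanter-to-stem-tip distance, rescaled from pixels to millimeters."""
    mm_per_pixel = marker_mm / marker_px  # magnification correction
    return math.dist(trochanter, stem_tip) * mm_per_pixel

def subsidence_mm(earlier_mm: float, later_mm: float) -> float:
    """Change in the corrected distance between two successive radiographs."""
    return later_mm - earlier_mm

# Hypothetical values: a 25 mm marker spans 100 px, then 110 px.
first = corrected_distance_mm((100.0, 50.0), (100.0, 450.0), 100.0, 25.0)
second = corrected_distance_mm((120.0, 60.0), (120.0, 511.0), 110.0, 25.0)
change = subsidence_mm(first, second)
```

In this toy case the raw pixel distance grows from 400 to 451, but after correcting for the different magnification of the two radiographs the change is 2.5 millimeters.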

In hip surgery, AI-enabled imaging significantly shortens the time required to document hip cup positions. For a group of 15,000 patients, Dr. Wyles noted, the AI algorithm completed in eight hours a mundane task that would have taken two to three people six months. Using AI applications thus saves clinicians time.

AI also provides tremendous benefits for radiologic follow-up, delineating similarities and differences in the patient's structural status over time. This is especially helpful because routine X-rays are not all taken at the same medical center by the same personnel, so they may differ slightly.

Risk prediction. Dr. Wyles and his team also have found AI useful for risk prediction. "The technology can screen the X-ray and say, 'Mrs. Johnson's bone structure looks identical to three years ago,' " says Dr. Wyles.

"Then we get Mrs. Anderson, and the AI screens her recent X-ray and points out something has changed, and it points to where this change occurred."

He says that by comparing two X-rays side by side, the AI algorithms can detect movement of even less than 0.1 millimeter in the patient's anatomy.

"This can show you when a problem is brewing," says Dr. Wyles. "It's also actionable: If these algorithms showed slight movement, the orthopedic surgeon might require more-frequent X-rays and check the patient's symptoms, such as pain level."

He also indicates that by screening routine X-rays, AI can determine whether bone loss is present or whether the patient's bone quality has decreased.

"We are trying to get as much information as possible through our regular routine with X-rays," says Dr. Wyles. "X-rays are our bread-and-butter tool in radiology."

Natural language processing. Tools enabled with AI can extract information from clinical notes in free-text form, including typed or dictated notes. These tools also look for complications that might affect the patient's case, such as a draining wound.

"It tips me off that there might be a risk of infection or tells me about a comorbidity such as Parkinson's that might have an impact on the patient," says Dr. Wyles.

Dr. Wyles says this function — noting comorbidities — is especially important as medical records often do not talk to one another. The AI-enabled tool can offer information that providers would not have through reading notes external to the institution or through what they obtain in a patient interview.

Clinical registry augmentation. In this function, AI-enabled algorithms can extract information researchers are seeking, such as which patients have undergone a specific type of joint replacement.

Implications of the AI capabilities

All these orthopedics-related AI capabilities represent tools developed at Mayo Clinic in Minnesota. They offload work humans typically perform, freeing them up for other tasks.

"AI-enabled tools such as risk prediction or annotation are not to replace a physician," Dr. Wyles says. "The physicians are critical to interpreting information provided by these capabilities and making decisions. Rather than replacing a radiologist or orthopedic surgeon, the tools make these providers more effective."

Dr. Wyles says he and his lab have been refining and testing AI tools over the years. The first goal is to improve the quality of patient care.

He says these AI capabilities benefit patients referred to Mayo Clinic Orthopedic Surgery by supporting the best patient-specific decisions and detailed follow-up. He says Mayo Clinic orthopedic surgeons are dedicated to following patients for life.

Future of Mayo Clinic's AI-enabled orthopedics efforts

Dr. Wyles feels positive about the AI work the team has completed thus far and expects significant future advances.

"We are new to this — our lab and its efforts have been ongoing for three years — but we have a big team and a ton of momentum," he says. "We want to lead in this space and are poised to do so with the necessary resources and infrastructure."

He indicates that the AI algorithms his lab is working with cannot simply be created from scratch. The data repositories Mayo Clinic has built over decades are key to developing AI algorithms.

"We have an unusual amount of data collected at Mayo Clinic," he says. "We have the ingredients to move ahead in the AI sphere in orthopedics."

For more information

Refer a patient to Mayo Clinic.